Manual: Computational Anatomy Toolbox CAT

Introduction and overview

Introduction

The brain is the most complex organ of the human body, and no two brains are alike. The study of the human brain is still in its infancy, yet rapid advances in image acquisition and processing have enabled increasingly refined characterisations of its micro- and macro-structure. Major efforts focus on mapping group differences (e.g., young vs. old, diseased vs. healthy, male vs. female), capturing changes over time (e.g., from infancy to old age, or in the framework of neuroplasticity after a clinical intervention), and assessing correlations of brain attributes (e.g., measures of length, volume, shape) with behavioural, cognitive, or clinical parameters.

CAT is a powerful suite of tools for morphometric analyses with an intuitive graphical user interface, and it can also be used as a shell script. CAT is suitable for beginners, casual users, experts, and developers alike, providing a comprehensive set of analysis options, workflows, and integrated pipelines.

The available analysis streams support voxel-, surface-, and region-based morphometric analyses. Importantly, CAT includes various quality control options and covers the entire analysis workflow, from cross-sectional or longitudinal data processing to statistical analysis and visualisation of results.

Overview of the manual

This manual is intended to help users perform a computational anatomy analysis using the CAT Toolbox. Although it mainly focuses on voxel-based morphometry (VBM), other variants of computational analysis such as deformation-based morphometry (DBM), surface-based morphometry (SBM), and region of interest (ROI) morphometric analyses are also presented and can be applied with few changes.

The manual can be divided into four main sections:- First, a quick guide on how to get started is provided. This section covers downloading and installing the software and starting the Toolbox, and includes a brief overview of the steps of a VBM analysis.

- This is followed by a detailed description of a basic VBM analysis that guides you step by step through the entire process—from preprocessing to contrast selection. This description should provide the information needed to analyse most studies.

- Specific cases of VBM analyses require adapting the basic workflow. These cases include longitudinal studies and studies in children or special patient populations. Relevant changes to a basic VBM analysis, and how to apply them, are described here. Only the changes are described; steps such as quality control or smoothing are not repeated.

- The guide concludes with additional information about spaces after registration, naming conventions, and other hints.

Quick start guide

VBM data

- Segment data using defaults (use Segment Longitudinal Data for longitudinal data).

The resulting segmentations that can now be used for VBM are saved in the "mri" folder and are named "mwp1" for grey matter and "mwp2" for white matter. If you have used the longitudinal pipeline, the default segmentations for grey matter are named "mwp1r" or "mwmwp1r" if the longitudinal model for detecting larger changes was selected. - Estimate total intracranial volume (TIV) to correct for different brain sizes and volumes.

Select the XML files that are saved in the "report" folder. - Check data quality with Sample Homogeneity for VBM data (optionally include TIV and age as nuisance variables).

Select the grey or white matter segmentations from the first step. - Smooth data (recommended starting value: 6 mm1).

Select the grey or white matter segmentations from the first step. - Specify the Basic Models with the smoothed grey or white matter segmentations and check design orthogonality and sample homogeneity:

- Select "Two-sample t-test" or "Multiple regression", or use "Full factorial" for any cross-sectional data.

- Use "Flexible factorial" for longitudinal data.

- Use TIV as a covariate (confound) to correct different brain sizes.

- Select threshold masking with an absolute value of 0.1. You can increase this to 0.2 or even 0.25 if you still notice non-brain areas in your analysis.

- If you observe a considerable correlation between TIV and any other parameter of interest, it is advisable to use global scaling with TIV. For more information, refer to Orthogonality.

- Estimate the model and then call Results.

- Optionally Transform and Threshold SPM-maps to (log-scaled) p-maps or correlation maps.

- Optionally, try Threshold-Free Cluster Enhancement (TFCE) with the SPM.mat file of a previously estimated statistical design.

- Optionally Overlay Selected Slices. If you are using log-p scaled maps from "Transform SPM-maps" without thresholds or the TFCE_log maps, use the following values as the lower limit for the colormap: 1.3 (P<0.05); 2 (P<0.01); 3 (P<0.001).

- Optionally use Surface Overlay for visualisation of your results. Select the results (preferably saved as log-p maps with "Transform SPM-maps" or the TFCE_log maps with the different methods for multiple comparison correction) to display rendering views, slice overlay, and a glassbrain of your results.

- Optionally estimate results for ROI analysis using Analyze ROIs. Here, the SPM.mat file of the already estimated statistical design will be used. For more information, see Atlas creation and ROI-based analysis.

Additional surface data

- Segment data and select "Surface and thickness estimation" under "Writing options" (for longitudinal data use Segment Longitudinal Data).

The surface data are saved in the folder "surf" and are named "?h.thickness.*" for cortical thickness. - Optionally, Extract Additional Surface Parameters (e.g. sulcal depth, gyrification, cortical complexity).

- Resample and smooth surface data (suggested starting value 12 mm for cortical thickness and sulcal depth and 20–25 mm for folding measures1, use the default merging of hemispheres).

Select the "lh.thickness.*" data in the folder "surf". The resampled data are named "s12.mesh.resampled_32k.thickness.*" for 12 mm smoothed, merged hemispheres that were resampled to 32k template space. - Check data quality of the resampled data using Sample Homogeneity.

- Build Basic Models for the resampled data and check design orthogonality and sample homogeneity.

- Select "Two-sample t-test" or "Multiple regression" or use "Full factorial" for any cross-sectional data.

- Use "Flexible factorial" for longitudinal data.

- TIV is not necessary as a covariate (confound) because cortical thickness or other surface values are usually not dependent on TIV.

- No threshold masking is needed.

- If you find a considerable correlation between a nuisance parameter and any other parameter of interest, it is advisable to use global scaling with that parameter. For more information, refer to Orthogonality.

- Estimate the surface model and finally call Results.

- Optionally Transform and Threshold SPM-maps to (log-scaled) p-maps or correlation maps.

- Optionally, you can try Threshold-Free Cluster Enhancement (TFCE) with the SPM.mat file of a previously estimated statistical design.

- Optionally use Surface Overlay for visualisation of your results. Select the results (preferably saved as log-p maps with "Transform SPM-maps" or the TFCE_log maps with the different methods for multiple comparison correction) for the merged hemispheres to display rendering views of your results.

- Optionally Extract ROI-based Surface Values such as gyrification or fractal dimension to provide ROI analysis. Since version 12.7 extraction of ROI-based thickness is not necessary anymore because this is now included in the segmentation pipeline.

- Optionally estimate results for ROI analysis using Analyze ROIs. Here, the SPM.mat file of the already estimated statistical design will be used. For more information, see Atlas creation and ROI-based analysis.

Additional options

Additional parameters and options are displayed in the CAT expert mode. Please note that this mode is for experienced users only.

Errors during preprocessing

Please use the Report Error function if any errors occurred during preprocessing. You first have to select the "err" directory, which is located in the folder of the failed record, and finally, the specified zip file should be attached manually in the mail.

1Note to filter sizes for Gaussian smoothing

Smoothing increases the signal-to-noise ratio. The matched filter theorem states that the filter that matches your signal maximizes the signal-to-noise ratio. If we expect signal with a Gaussian shape and a FWHM of, say 6 mm in our images, then this signal will be best detected after we smooth our images with a 6 mm FWHM Gaussian filter. If you are trying to study small areas like the amygdala, we can use smaller filter sizes because of the small size of these regions. For larger regions like the cerebellum, the effects are better detected with larger filters.

Please also note that for the analysis of cortical folding measures such as gyrification or cortical complexity the filter sizes have to be larger (i.e. in the range of 15–25 mm). This is due to the underlying nature of this measure that reflects contributions from both sulci as well as gyri. Therefore, the filter size should exceed the distance between a gyral crown and a sulcal fundus.

Version information

Preprocessing should remain unaffected until the next minor version number (12.x). New processing of your data is not necessary if the minor version number of CAT remains unchanged.

Changes in version CAT26.0 (3010)

Changes in preprocessing pipeline (which affect your results compared to CAT12.9)

- The cortical surface extraction pipeline has been substantially revised, resulting in faster execution and more robust cortical thickness estimation. The initial surface reconstruction now employs an improved Marching Cubes implementation that directly produces topology-correct surfaces, thereby avoiding downstream topology correction issues.

- The VBM pipeline has been comprehensively updated to improve stability and robustness, particularly for challenging acquisitions such as MP2RAGE. These improvements also enhance the accuracy and reliability of the initial affine registration.

- The quality assessment framework has been updated according to the methods described in Dahnke et al., 2024. The former IQR metric has been replaced by SIQR.

- A new versioning scheme has been introduced, aligned with the year-based system adopted in recent SPM releases. In contrast to SPM, only the release year is used, followed by semantic versioning. The new series therefore starts with CAT26.0.

-

Consistent with the updated naming convention, the toolbox directory within

spm/toolboxhas been renamed fromcat12toCAT.

New features

- A new batch has been added under "Volume Tools" > "Rescan Average" to average rescans either in BIDS format or from manually selected images. This can be used, for example, to minimize motion artifacts. The functionality is already available directly from the menu.

- To run SPM ImCalc on multiple images (e.g., to compute T1w/T2w ratios for all subjects), use the batch available under "Volume Tools" > "Multi-Subject Image Calculator".

- The output of the CAT preprocessing is now additionally consolidated into a single CSV file. The corresponding batch extracts information from the preprocessing XML files and is available under "Data Quality" > "...".

Changes in version CAT12.9 (2550)

Changes in preprocessing pipeline (which affect your results compared to CAT12.8.2)

- The cortical surface extraction has been extensively updated with many changes that result in more reliable thickness measurements and better handling of partial volume effects.

- Initial affine registration now uses a brain mask to obtain more reliable estimates, resulting in more accurate non-linear spatial registration.

- Various smaller changes have been made for blood vessel detection and background detection for skull-stripping.

- Segment Longitudinal Data is now saving both plasticity and ageing models by default.

- The previous cat_vol_sanlm from r1980 has been rescued and renamed to cat_vol_sanlm2180.m, which performs significantly better.

- The quartic mean Z-score is now used to assess sample homogeneity as it gives greater weight to outliers and makes them easier to identify in the plot.

- Several changes have been made to support Octave compatibility.

Changes in version CAT12.8.2 (2130)

Important new features

- The CAT manual is now converted to HTML and merged with the online help into a single HTML file with interactive MATLAB links that can be called in MATLAB.

- Added new thalamic nuclei atlas.

- Moved some rarely used CAT options to expert mode.

- Added many new options to visualise 3D (VBM) results (e.g. new glass brain).

- The tool to check Sample Homogeneity has many new options and is now based on calculation of Z-score which is much faster for larger samples.

- New Basic Models to more easily define cross-sectional and longitudinal statistical designs. Some unnecessary options have been removed compared to "Basic Models" in SPM and some useful options have been added.

Changes in version CAT12.8.1 (1975)

Changes in preprocessing pipeline (which affect your results compared to CAT12.8)

- The longitudinal pipeline has been largely updated and is no longer compatible with preprocessing with version 12.8:

- The estimate of subject-specific TPM should now be more stable and less sensitive to changes between time points.

- For data where the brain/head is still growing between time points and major changes are expected, a new longitudinal development model was added. For this type of data, an adapted pipeline was created that is very similar to the longitudinal ageing model, but uses a time point-independent affine registration to adjust for brain/head growth. In addition, this model uses a subject-specific TPM based on the average image.

- An additional longitudinal report is now provided to better assess differences between time points.

Changes in version CAT12.8 (1830)

Changes in preprocessing pipeline (which affect your results compared to CAT12.7)

- Volumetric templates, atlases, and TPMs are now transformed to MNI152NLin2009cAsym space to better match existing standards. The templates_volume folder is now renamed to ''templates_MNI152NLin2009cAsym'' to indicate the template space used. The Dartel and Geodesic Shooting templates are renamed or relocated:

- templates_volumes/Template_0_IXI555_MNI152_GS.nii → templates_MNI152NLin2009cAsym/Template_0_GS.nii

- templates_volumes/Template_1_IXI555_MNI152.nii → templates_MNI152NLin2009cAsym/Template_1_Dartel.nii

- templates_volumes/TPM_Age11.5.nii → templates_MNI152NLin2009cAsym/TPM_Age11.5.nii

- templates_volumes/Template_T1_IXI555_MNI152_GS.nii → templates_MNI152NLin2009cAsym/Template_T1.nii

- spm12/toolbox/FieldMap/T1.nii → templates_MNI152NLin2009cAsym/T1.nii

- spm12/toolbox/FieldMap/brainmask.nii → templates_MNI152NLin2009cAsym/brainmask.nii

- The volumetric atlases have been revised and are now defined with a spatial resolution of 1mm, except for the Cobra atlas, which is defined with 0.6mm resolution. The labels of the original atlases were either transformed from the original data or recreated using a maximum likelihood approach when manual labels were available for all subjects (Cobra, LPBA40, IBSR, Hammers, Neuromorphometrics). In addition, the original labels are now used for all atlases if possible. Some atlases were updated to include new regions (Julichbrain, Hammers) and a new atlas of thalamic nuclei was added. Please note that this will also result in slight differences in ROI estimates compared to previous versions.

- The bounding box of the Dartel and Geodesic Shooting templates has been changed, resulting in a slightly different image size of the spatially registered images (i.e. modulated normalized segmentations). Therefore, older preprocessed data should not (and cannot) be mixed with the new processed data (which is intended).

- Transformed T1 Dartel/GS surface templates to the new MNI152NLin2009cAsym space:

- templates_surfaces/lh.central.Template_T1_IXI555_MNI152_GS.gii → templates_surfaces/lh.central.Template_T1.gii

- templates_surfaces/rh.central.Template_T1_IXI555_MNI152_GS.gii → templates_surfaces/rh.central.Template_T1.gii

- templates_surfaces_32k/lh.central.Template_T1_IXI555_MNI152_GS.gii → templates_surfaces_32k/lh.central.Template_T1.gii

- templates_surfaces_32k/rh.central.Template_T1_IXI555_MNI152_GS.gii → templates_surfaces_32k/rh.central.Template_T1.gii

- The surface pipeline has been optimized to better handle data at different spatial resolutions.

- Older preprocessing pipelines (12.1, 12.3, 12.6) were removed because their support became too difficult.

Important new features

- The Mahalanobis distance in the Quality Check is now replaced by the normalized ratio between overall weighted image quality (IQR) and mean correlation. A low ratio indicates good quality before and after preprocessing and means that IQR is highly rated (resulting in a low nominal number/grade) and/or mean correlation is high. This is hopefully a more intuitive measure to combine image quality measurement before and after preprocessing.

- CAT now allows the use of the BIDS directory structure for storing data (not possible for the longitudinal pipeline). A BIDS path can be defined relative to the participant level directory. The segmentation module now supports the input of nii.gz files (not possible for the longitudinal pipeline).

- The Basic Models function has been completely restructured and simplified. There are now only two models available for: (1) cross-sectional data and (2) longitudinal data. Options that are not relevant for VBM or SBM have been removed. In addition, a new experimental option has been added that allows a voxel-wise covariate to be defined. This can be used (depending on the contrast defined) to (1) remove the nuisance effect of structural data (e.g. GM) on functional data or (2) examine the relationship (regression) between functional and structural data. Additionally, an interaction can be modeled to investigate whether the regression between functional and structural data differs between two groups. Please note that the saved vSPM.mat file can only be evaluated with the TFCE toolbox.

- Added a new function cat_io_data2mat.m to save spatially registered volume or resampled surface data as Matlab data matrix for further use with machine learning tools. Volume data can be resampled to lower spatial resolutions and can optionally be masked to remove non-brain areas.

- Added a new function cat_vol_ROI_summarize.m to summarise co-registered volume data within a region of interest (ROI). This tool can be used to estimate ROI information for other (co-registered) modalities (i.e. DTI, (rs)fMRI), which can also be defined as 4D data. Several predefined summary functions are available, as well as the possibility to define your own function.

- Added a new function cat_stat_quality_measures.m to estimate and save quality measures for very large samples.

- Added standalone tools for de-facing, DICOM import, and estimating and saving quality measures for large samples.

Changes in version CAT12.7 (1700)

Changes in preprocessing pipeline (which affect your results compared to CAT12.6)

- Geodesic shooting registration and surface estimation are now used by default.

- The surface pipeline is largely updated: (1) Parameters for surface reconstruction were optimized. (2) FreeSurfer distance method Tfs is now implemented, which is computed as the average of the closest distances from the pial to the white matter surface and from the white matter to the pial surface. This reduces the occurrence of larger thickness values and results in more reliable thickness measures. For mapping of 3D data the old thickness metric from PBT is more appropriate and is still used.

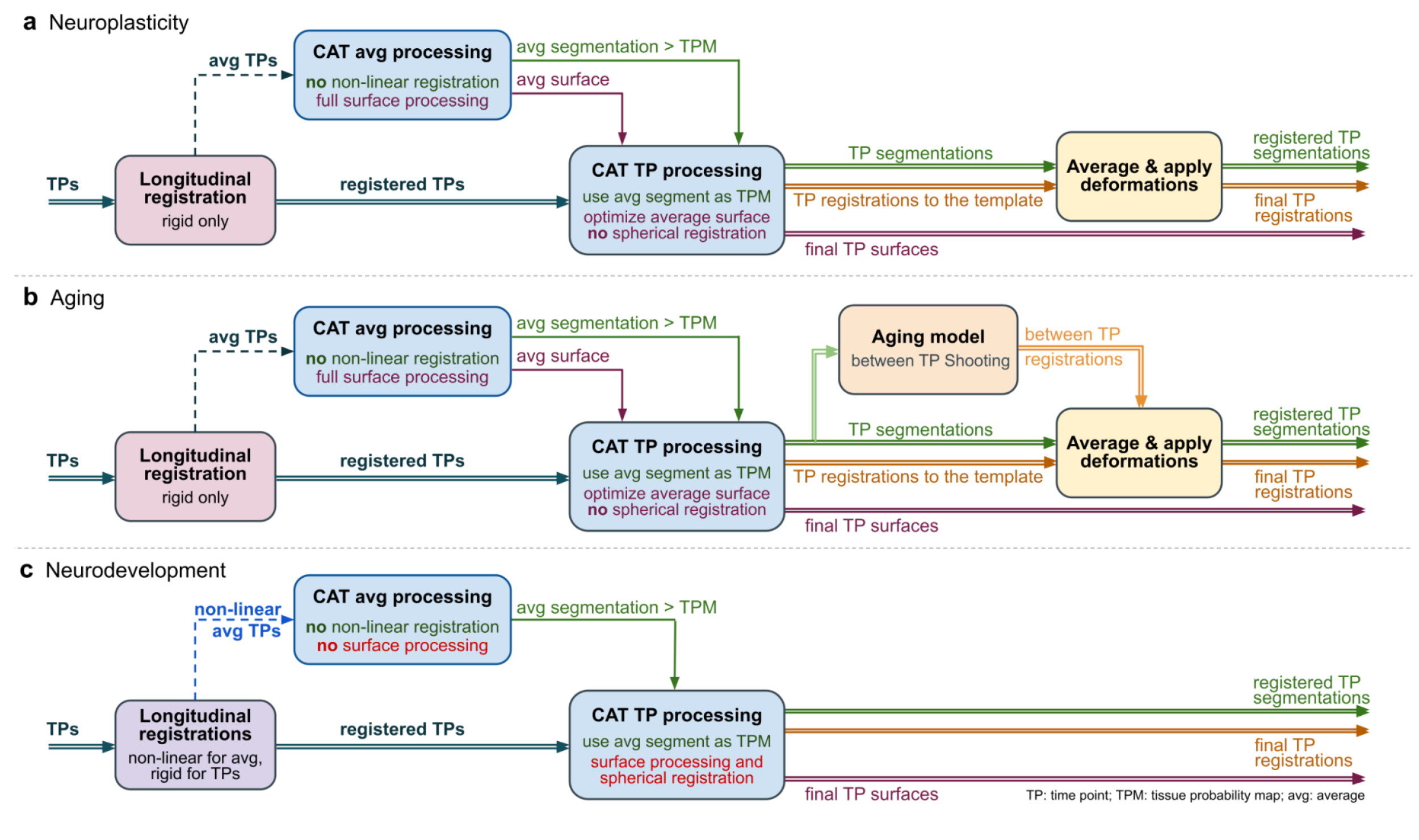

- An additional longitudinal model is implemented that also takes into account deformations between time points. The use of deformations between the time points makes it possible to estimate and detect larger changes, while subtle effects over shorter periods of time in the range of weeks or a few months can be better detected with the model for small changes.

- Minor changes were made to the segmentation approach to improve accuracy and reliability.

- Internal resampling is now using a new ''optimal'' resolution setting to better support high-resolution data.

- Changed recommendation and defaults for smoothing size to smaller values.

- Renamed template folder for volumes to templates_volumes.

- Atlases installed in spm12/atlas are now called cat12_atlasname.

- The old AAL atlas has been replaced by the AAL3 atlas.

- Cobra atlas is updated because of some previous inconsistencies.

Important new features

- New GUI

- Added older preprocessing pipelines (12.1, 12.3, 12.6) to provide compatible versions to previous preprocessing. These older preprocessing pipelines are available via the SPM batch editor (SPM → Tools → CAT) or through expert mode.

- Added simple batch for cross-sectional and longitudinal data that combines several processing steps from preprocessing to smoothing. These batches are available via the SPM batch editor(SPM → Tools → CAT) or through expert mode.

- The function Display surface results can now also visualise results from VBM analysis and map the 3D volume information onto the surface using an absmax mapping function inside the cortical band. The function is now renamed to ''Surface Overlay''.

- You can call Results now from the CAT GUI with some new functionality for surfaces and the option to call TFCE results.

- CAT now uses Piwik for anonymized CAT user statistics (i.e. version information, potential errors). See CAT user statistics for more information. This can optionally be disabled in cat_defaults.m.

- The extraction of ROI-based thickness is no longer necessary because this is now included in the segmentation pipeline in cat_main.m.

- Changed gifti-format after resampling to use external dat-files. This increases processing speed and prevents the SPM.mat file for surfaces from becoming too large. This can optionally be disabled in cat_defaults.m.

- The use of own atlases for ROI processing is now supported.

- Updated and extended examples for statistical designs and respective contrasts in the CAT manual.

Changes in version CAT12.6 (1445)

Changes in preprocessing pipeline (which affect your results compared to CAT12.5)

- Two main parts of the preprocessing of CAT were largely updated: (1) Incorrect estimates of the initial affine registration were found to be critical for all subsequent preprocessing steps and mainly concerned skull-stripping and tissue segmentation. This was a particular problem in the brains of older people or children, where the thickness of the skull differs from that of the template. The new estimate of the initial affine registration should now be more robust. In the CAT report, the registered contour of the skull and the brain is now overlaid onto the image to allow for easier quality control. (2) Skull-stripping now uses a new adaptive probability region-growing (APRG) approach, which should also be more robust. APRG refines the probability maps of the SPM approach by region-growing techniques of the gcut approach with a final surface-based optimization strategy. This is currently the method with the most accurate and reliable results.

- The longitudinal pipeline should now also be more sensitive for the detection of effects over longer time periods with VBM (ROI and SBM approaches are not affected by the length of the period). In earlier versions, the average image was used to estimate the spatial registration parameters for all time points. Sometimes this average image was not as accurate if the images of a subject were too different (e.g. due to large ventricular changes). Now, we rather use the average spatial registration parameters (i.e. deformations) of all time points, which makes the approach more robust for longer periods of time. However, the SPM Longitudinal Toolbox can be a good alternative for longer periods of time if you want to analyse your data voxel by voxel. Surface-based preprocessing and also the ROI estimates in CAT are not affected by the potentially lower sensitivity to larger changes, as the realigned images are used independently to create cortical surfaces, thickness, or ROI estimates.

Important new features

- CAT report now additionally plots the contour of the registered skull and brain onto the image and visualises skull-stripping. Display surface results is largely updated.

- Parallelization options in CAT now enable subsequent batch jobs and are also supported for longitudinal preprocessing.

Changes in version CAT12.5 (1355)

Changes in preprocessing pipeline (which affect your results compared to CAT12.3)

- Detection of white matter hyperintensities (WMHs) is updated and again enabled by default.

- The default internal interpolation setting is now "Fixed 1 mm" and offers a good trade-off between optimal quality and preprocessing time and memory demands. Standard structural data with a voxel resolution around 1 mm or even data with high in-plane resolution and large slice thickness (e.g. 0.5x0.5x1.5 mm) will benefit from this setting. If you have higher native resolutions the highres option "Fixed 0.8 mm" will sometimes offer slightly better preprocessing quality with an increase of preprocessing time and memory demands.

Important new features

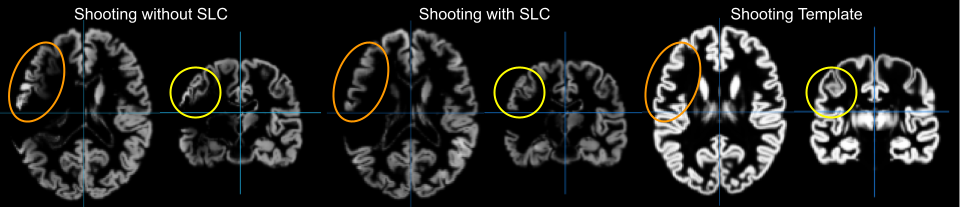

- CAT can now deal with lesions that have to be set to zero in your image using the Stroke Lesion Correction (SLC) in expert mode. These lesion areas are not used for segmentation or spatial registration, thus these preprocessing steps should be almost unaffected.

Changes in version CAT12.4 (1342)

- This version had some severe errors in spatial registration which affected all spatially registered data and should not be used anymore.

Changes in version CAT12.3 (1310)

Changes in preprocessing pipeline (which affect your results compared to CAT12.2)

- Skull-stripping is again slightly changed and the SPM approach is now used as default. The SPM approach works quite well for the majority of data. However, in some rare cases, parts of GM (i.e. in the frontal lobe) might be cut. If this happens the GCUT approach is a good alternative.

- Spatial adaptive non-local mean (SANLM) filter is again called as a very first step because noise estimation and de-noising works best for original (non-interpolated) data.

- Detection of white matter hyperintensities (WMHs) is currently disabled by default, because of unreliable results for some data.

Important new features

- Cobra atlas has been largely extended and updated.

Changes in version CAT12.2 (1290)

Changes in preprocessing pipeline (which affect your results compared to CAT12.1)

- Skull-stripping now additionally uses SPM segmentations by default: The default gcut approach in CAT12.1 removed too much of the surrounding (extracranial) CSF, which led to a slight underestimation of TIV for atrophied brains. The skull-stripping approach based on the SPM segmentations prevents this through a more conservative approach. However, sometimes parts of the meninges (i.e. dura mater) or other non-brain parts remain in the GM segmentation. By combining both approaches a more reliable skull-stripping is achieved.

- More reliable estimation of TIV: The changed skull-stripping also affects the estimation of TIV, which is now more reliable, especially for atrophied brains.

Important new features

- Automatic check for design orthogonality and sample homogeneity using SPM.mat in Basic Models

- Added equi-volume model by Bok and a multi-save option for mapping native volumes to individual surfaces.

- Added Local-Global Intrinsic Functional Connectivity parcellation by Schaefer et al. for resting-state fMRI data.

Getting started

Download and installation

- The CAT Toolbox runs within SPM. That is, SPM must be installed and added to your Matlab search path before the CAT Toolbox can be installed (see the SPM site and SPM Wiki for more help).

- Download and unzip the CAT Toolbox. You will get a folder named "CAT", which contains various Matlab files and compiled scripts. Copy the folder "CAT" into the SPM "toolbox" folder.

- On newer macOS systems (10.15 or higher: Catalina, Big Sur, Monterey, Ventura) you may need to follow the SPM installation instructions to prevent issues with MEX files.

- Older CAT versions can be found here.

Starting the toolbox

- Start Matlab

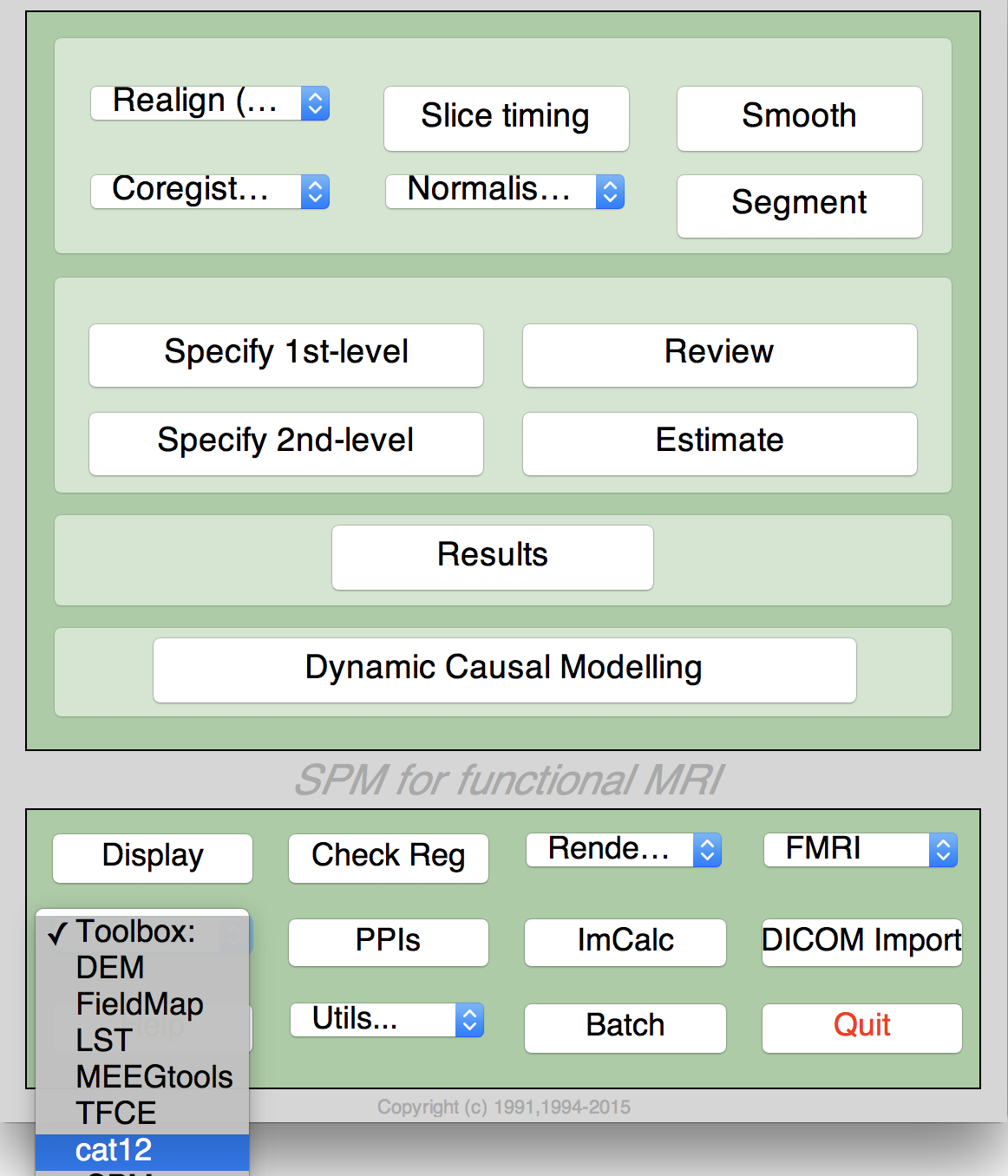

- Start SPM (i.e., type "spm pet")

- Select "CAT" from the SPM menu (see Figure 1). You will find the drop-down menu between the "Display" and the "Help" button (you can also call the Toolbox directly by typing "CAT" on the Matlab command line). This will open the CAT Toolbox as an additional window (Fig. 2).

|

|

Basic VBM analysis (overview)

The CAT Toolbox comes with different modules, which may be used for analysis. Usually, a VBM analysis comprises the following steps:

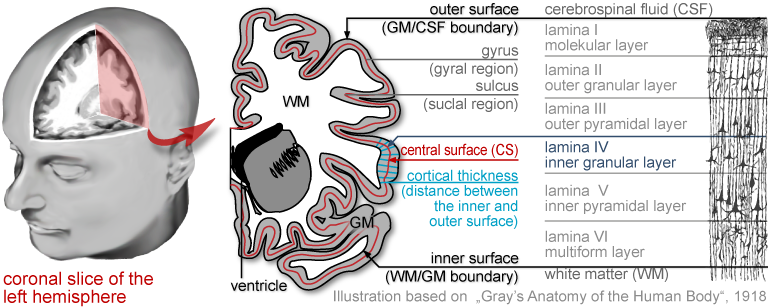

(a) Preprocessing:- T1 images are normalized to a template space and segmented into grey matter (GM), white matter (WM), and cerebrospinal fluid (CSF). The preprocessing parameters can be adjusted via the module "Segment Data".

- After the preprocessing is finished, a quality check is highly recommended. This can be achieved via the modules "Display slices" and "Check sample". Both options are located in the CAT window under "Check Data Quality". Furthermore, quality parameters are estimated and saved in xml-files for each data set during preprocessing. These quality parameters are also printed on the report PDF-page and can be additionally used in the module "Check sample".

- Before entering the GM images into a statistical model, image data needs to be smoothed. Of note, this step is not implemented in the CAT Toolbox but achieved via the standard SPM module "Smooth".

- The smoothed GM images are entered into a statistical analysis. This requires building a statistical model (e.g. t-tests, ANOVAs, multiple regressions). This is done by the standard SPM modules "Specify 2nd Level" or preferably "Basic Models" in the CAT window covering the same function but providing additional options and a simpler interface optimized for structural data.

- The statistical model is estimated. This is done with the standard SPM module "Estimate" (except for surface-based data where the function "Estimate Surface Models" should be used instead).

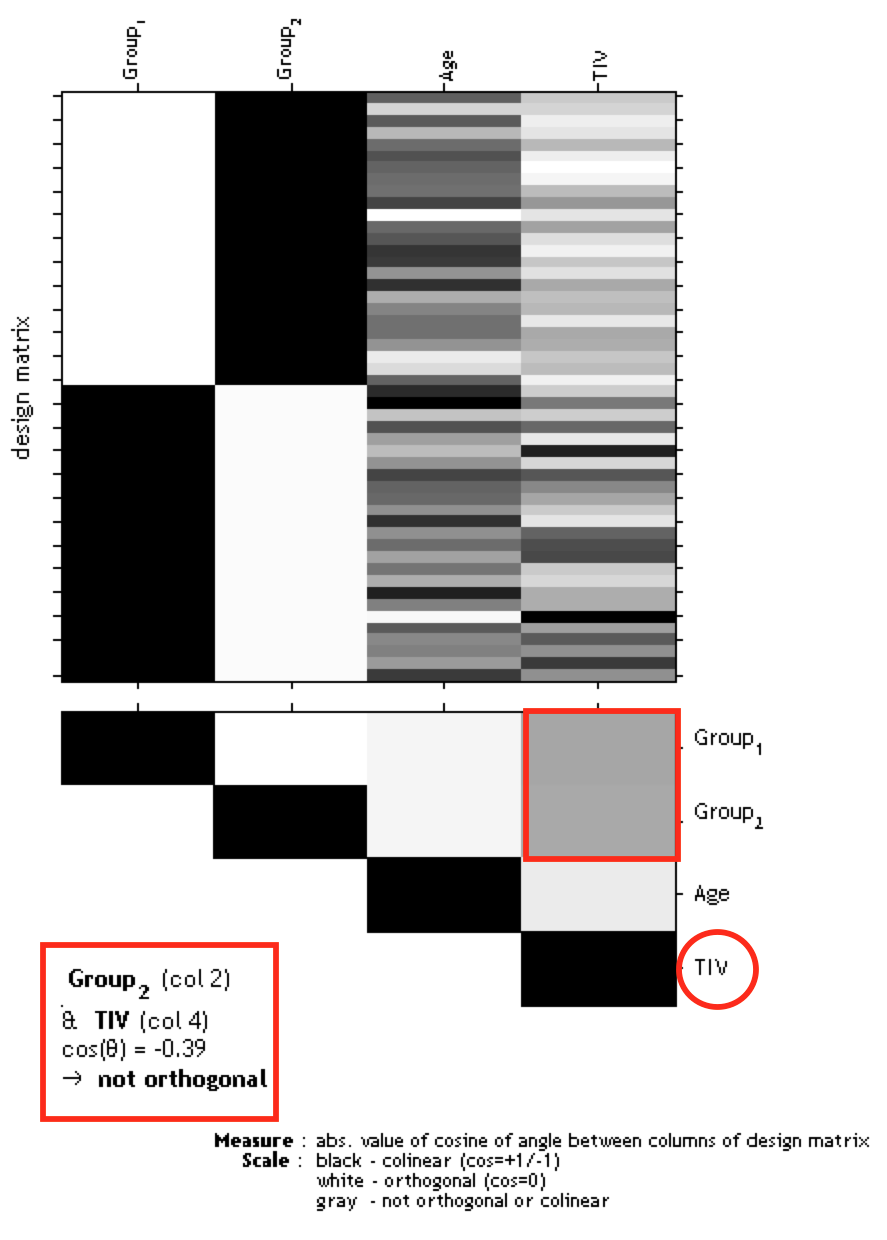

- If you have used total intracranial volume (TIV) as a confound in your model to correct for different brain sizes, it is necessary to check whether TIV shows a considerable correlation with any other parameter of interest and to use global scaling as an alternative approach if needed.

- After estimating the statistical model, contrasts are defined to get the results of the analysis. This is done with the standard SPM module "Results".

The sequence of "preprocessing → quality check → smoothing → statistical analysis" remains the same for every VBM or SBM analysis, even when different steps are adapted (see Processing of Data such as Children).

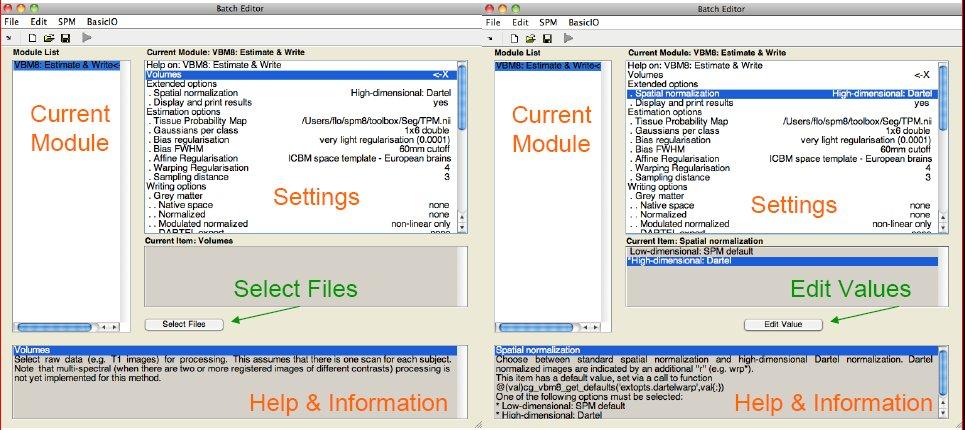

A few words about the Batch Editor...- As soon as you select a module from the CAT Toolbox menu, a new window (the Batch Editor) will open. The Batch Editor is the environment where you will set up your analysis (see Figure 3). For example, an "<-X" indicates where you need to select files (e.g. your image files, the template, etc.). Other parameters have either default settings (which can be modified) or require input (e.g. choosing between different options, providing text or numeric values, etc.).

- Once all missing parameters are set, a green arrow will appear on the top of the window (the current snapshots in Figure 3 show the arrow still in grey). Click this arrow to run the module or select "File → Run Batch". It is very useful to save the settings before you run the batch (click on the disk symbol or select "File → Save Batch").

* Additional CAT-related information can be found by selecting "VBM Website" in the CAT window (Tools → Internet → VBM Website). This will open a website. Here, look for "VBM subpages" on the right.

- Of note, you can always find helpful information and parameter-specific explanations at the bottom of the Batch Editor window*.

- All settings can be saved either as a .mat file or as a .m script file and reloaded for later use. The .m script file has the advantage of being editable with a text editor.

Overview of CAT processing

CAT major processing steps

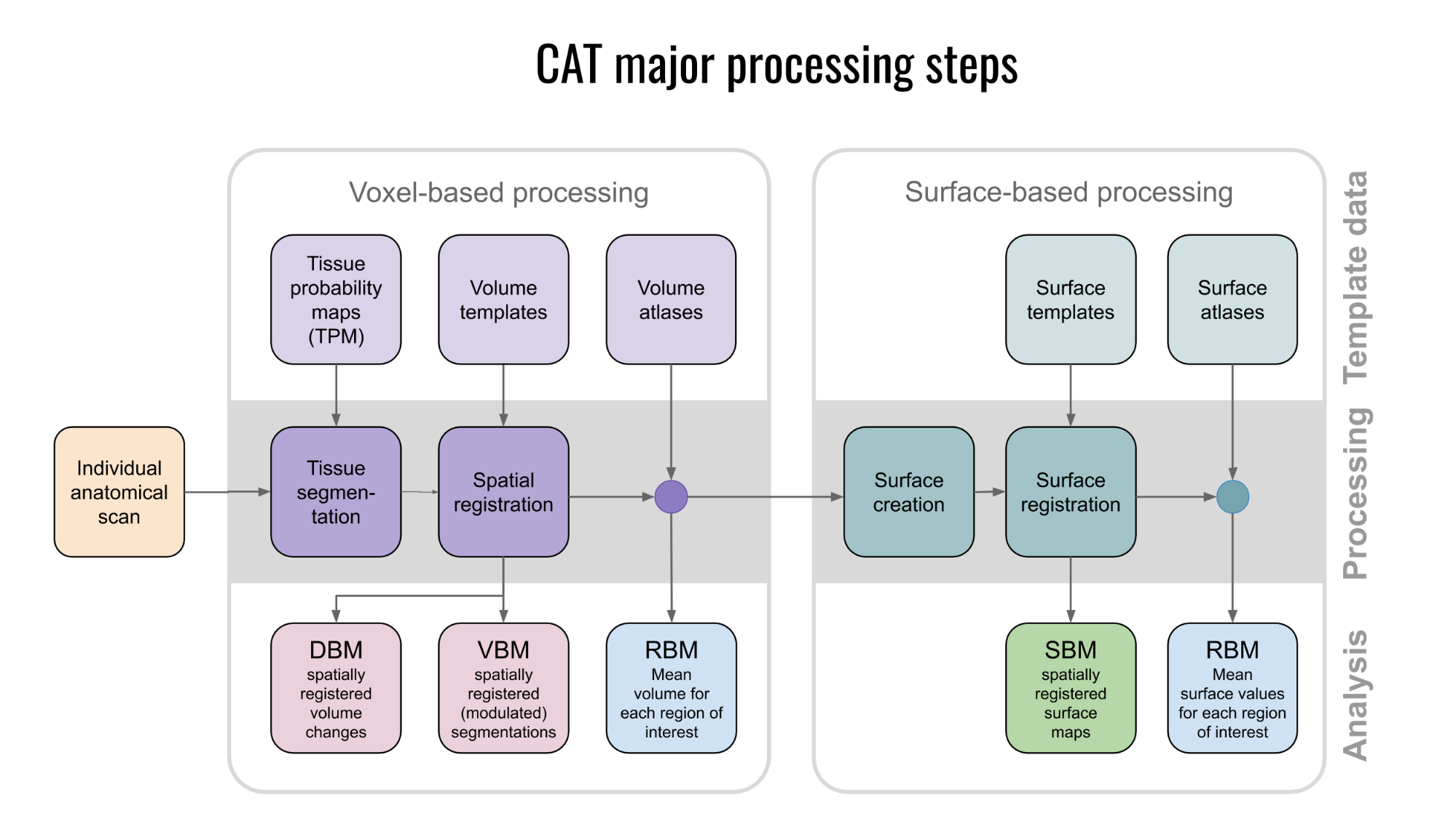

As shown in Figure 4, CAT's processing workflow comprises two main steps: voxel-based processing and surface-based processing. The former is a prerequisite for the latter, but not the other way round. That is, while voxel-based processing is always required for surface-based analyses, users not interested in surface-based analyses can simply omit this second step to save processing time. The "Voxel-based processing" step (Figure 4, left) can be thought of as one module for tissue segmentation and another one for spatial registration. An optional third module allows for the generation of ROIs and the calculation of ROI-based measures. The "Surface-based processing" step (Figure 4, right) can be thought of as one module for surface creation and another one for surface registration. An optional third module allows for the generation of surfaced-based ROIs and the calculation of ROI-based measures. As shown in Figure 4, the different modules utilize different priors, templates, and atlases. Those are briefly explained in the next paragraph.

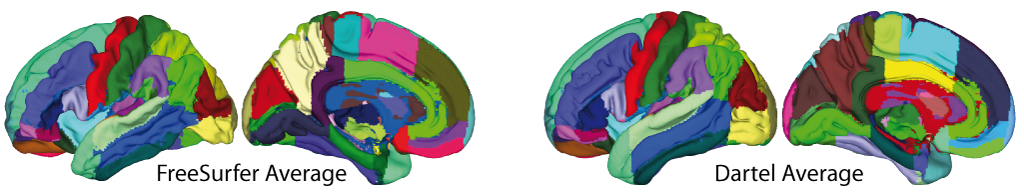

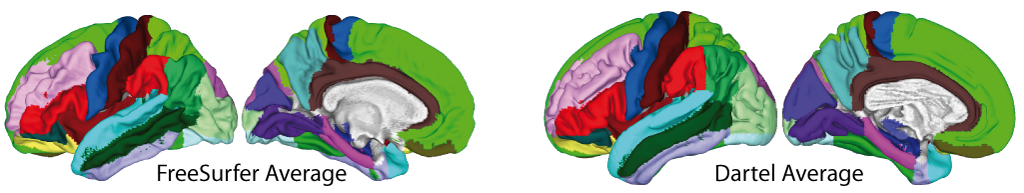

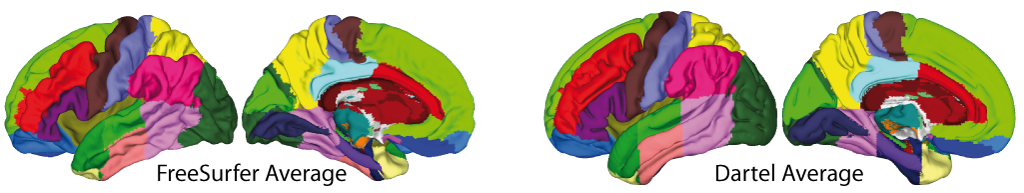

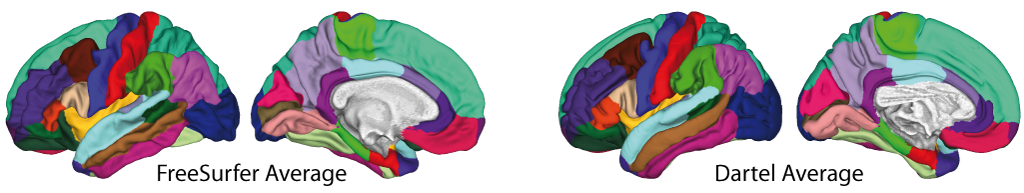

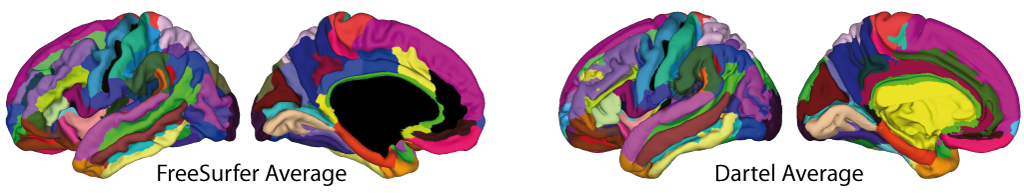

Voxel-based processing: While the final tissue segmentation in CAT is independent of tissue priors, the segmentation procedure is initialized using Tissue Probability Maps (TPMs). The standard TPMs (as provided in SPM) suffice for the vast majority of applications, and customized TPMs are only recommended for data obtained in young children. Please note that these TPMs should contain 6 classes: GM/WM/CSF and 3 background classes. For spatial registration, CAT uses Geodesic Shooting (Ashburner and Friston, 2011) or the older DARTEL approach (Ashburner, 2007) with predefined templates. Those templates are an appropriate choice for most studies and, again, sample-specific Shooting templates may only be advantageous for young children. For the voxel-based ROI analyses, CAT offers a selection of volume-based atlases in the predefined template space. Thus, any atlas-based ROI analysis requires a normalization of the individual scans to CAT's default Shooting template. On that note, the aforementioned creation and selection of a customized (rather than the predefined) DARTEL or Geodesic Shooting template will disable the third module for the voxel-based ROI analysis.

Surface-based processing: During the surface registration, the cortical surfaces of the two hemispheres are registered to the FreeSurfer "FsAverage" template (as provided with CAT). For surface-based ROI analyses, CAT provides a selection of surface-based atlases. As the surface registration module uses the FreeSurfer "FsAverage" template, surface-based ROI analyses are not impacted by any template modification during voxel-based processing. In contrast to voxel-based ROI analyses, surface-based ROI analyses can, therefore, be applied regardless of whether the predefined or customized versions of the DARTEL or Geodesic Shooting template have been used.

CAT processing steps in detail

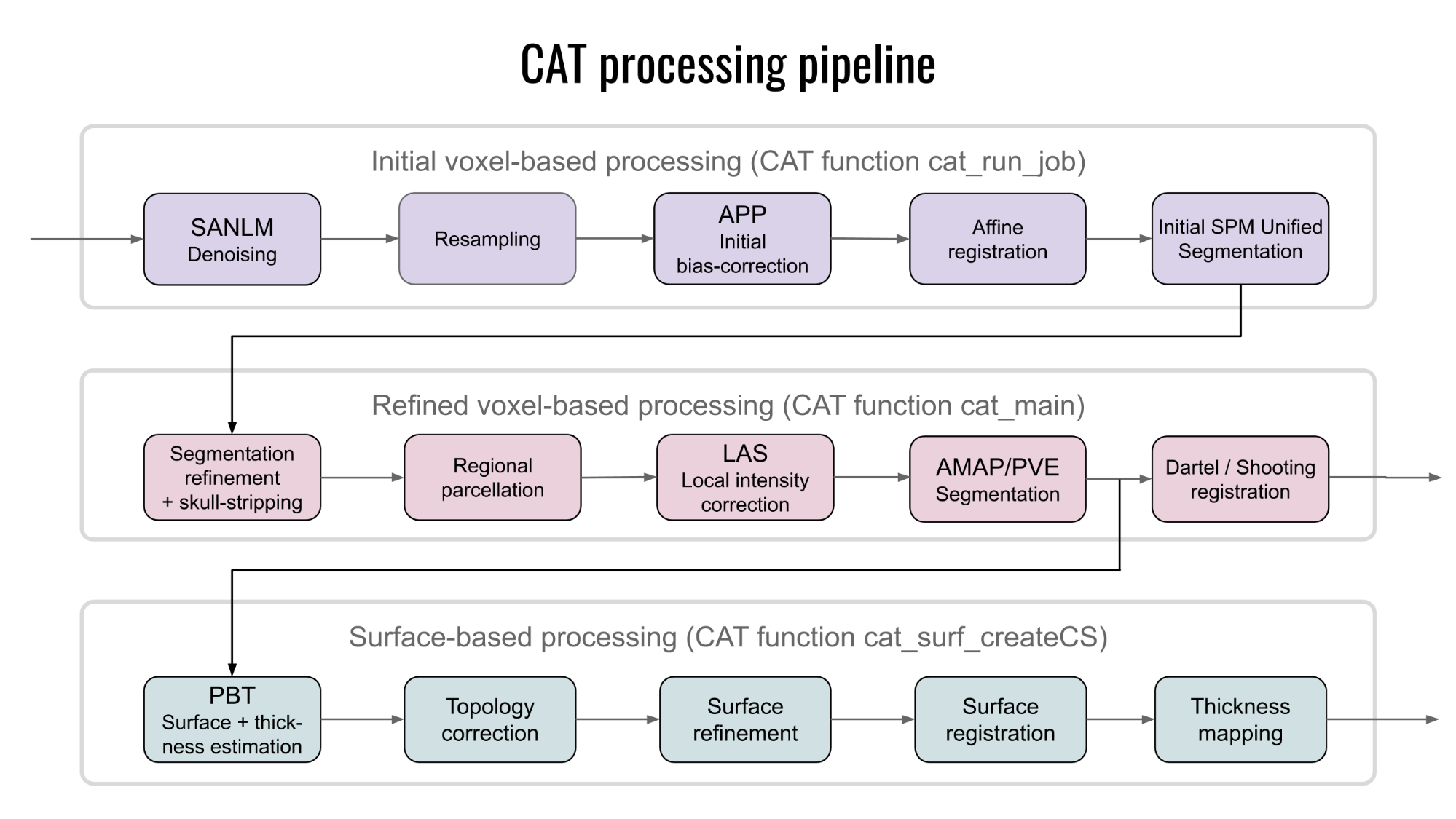

The modules described in the previous section help understand the CAT's overall processing workflow, including its priors, templates, and atlases. Also, data processing in CAT can be separated into three main processes: (1) the initial voxel-based processing, (2) the main voxel-based processing, and (3) the surface-based processing (optional), as further detailed below and visualised in Figure 5.

The "initial voxel-based processing" begins with a spatially adaptive non-local means (SANLM) denoising filter (Manjon et al., 2010), which is followed by internal resampling to properly accommodate low-resolution images and anisotropic spatial resolutions. The data are then bias-corrected and affine-registered (to further improve the outcomes of the following steps) followed by the standard SPM "unified segmentation" (Ashburner and Friston, 2005). The outcomes of the latter step will provide the starting estimates for the subsequent refined voxel-based processing.

The "refined voxel-based processing" uses the output from the unified segmentation and proceeds with skull-stripping of the brain. The brain is then parcellated into the left and right hemisphere, subcortical areas, and the cerebellum. Furthermore, local white matter hyperintensities are detected (to be later accounted for during the spatial normalization and cortical thickness estimation). Subsequently, a local intensity transformation of all tissue classes is performed, which is particularly helpful to reduce the effects of higher grey matter intensities in the motor cortex, basal ganglia, or occipital lobe before the final adaptive maximum a posteriori (AMAP) segmentation. This final AMAP segmentation step (Rajapakse et al., 1997), which does not rely on a priori information of the tissue probabilities, is then refined by applying a partial volume estimation (Tohka et al., 2004), which effectively estimates the fractional content for each tissue type per voxel. As a last default step, the tissue segments are spatially normalized to a common reference space using Geodesic Shooting (Ashburner and Friston, 2011) registrations.

Optionally, the "surface-based processing" will be run following the completion of the voxel-based processing steps. Here, the cortical thickness estimation and reconstruction of the central surface occur in one step using a projection-based thickness (PBT) method (Dahnke et al., 2013). Importantly, this PBT allows the appropriate handling of partial volume information, sulcal blurring, and sulcal asymmetries without explicit sulcus reconstruction (Dahnke et al., 2013). After the initial surface reconstruction, topological defects* are repaired using spherical harmonics (Yotter et al., 2011a). The topological correction is followed by a surface refinement, which results in the final central surface mesh. This mesh provides the basis to extract folding patterns (i.e., based on the position of mesh nodes relative to each other), where the resulting local values (e.g., absolute mean curvature) are projected onto each node. Subsequently, the individual central surfaces are spatially registered to the FreeSurfer "FsAverage" template using a spherical mapping with minimal distortions (Yotter et al., 2011b). In the last step, the local thickness values are transferred onto the FreeSurfer "FsAverage" template. While this last step is performed by default during the surface-based processing, it can be repeated to also transfer measurements of cortical folding (e.g., gyrification) as well as other measurements or data (e.g., functional or quantitative MRI) to the FreeSurfer "FsAverage" template. Note that the spatial registration of cortical measurements is combined with a spatial smoothing step in CAT to prepare the data for statistical analysis.

Preprocessing data

First Module: Segment Data

Please note that additional parameters for expert users are displayed in the GUI if you set the option cat.extopts.expertgui to "1" in cat_defaults.m or call CAT by:

CAT("expert")

CAT → Preprocessing → Segment Data

Parameters:

- Volumes <-X → Select Files → [select the new files] → Done

- Select one volume for each subject. As the Toolbox does not support multispectral data (i.e., different imaging methods for the same brain, such as T1-, T2-, diffusion-weighted, or CT images), it is recommended to choose a T1-weighted image.

- Importantly, the images need to be in the same orientation as the priors; you can double-check and correct them by using "Display" in the SPM menu. The priors are located in your SPM folder (SPM → tpm → TPM.nii).

- Split job into separate processes → [use defaults or modify]

- To use multi-threading, the CAT segmentation job with multiple subjects can be split into separate processes that run in the background. If you don't want to run processes in the background then set this value to 0.

- Keep in mind that each process needs about 1.5–2 GB of RAM, which should be considered when choosing the appropriate number of processes.

- Options for initial SPM affine registration → [use defaults or modify]

- The defaults provide a solid starting point. The SPM tissue probability maps (TPMs) are used for the initial spatial registration and segmentation. Alternatively, customized TPMs can be chosen (e.g. for pediatric data) that were created with the Template-O-Matic (TOM) Toolbox. Please note that these TPMs should contain 6 classes: GM/WM/CSF and 3 background classes.

- Extended options for CAT segmentation → [use defaults or modify]

- Again, the defaults provide a solid starting point. Using the extended options, you can adjust specific parameters or the strength of different corrections ("0" means no correction and "0.5" is the default value that works best for a large variety of data).

- CAT provides a template for the high-dimensional Shooting registration that should work for most data. However, a customized Shooting template can be selected (e.g. for children data) that was created using the Shooting toolbox. For more information on the necessary steps, see the section "Customized Shooting Template".

- Writing options → [use defaults or modify]

- For GM and WM image volumes see information about native, normalized, and modulated volumes.

Note: The default option "Modulated normalized" results in an analysis of relative differences in regional GM volume that have to be corrected for individual brain size in the statistical analysis using total intracranial volume (TIV). - A Bias, noise, and globally intensity corrected T1 image, in which MRI inhomogeneities and noise are removed and intensities are globally normalized, can be written in normalized space. This is useful for quality control and also to create an average image of all normalized T1 images to display/overlay the results.

Note: For a basic VBM analysis, use the defaults. - A partial volume effect (PVE) label image volume can also be written in normalized or native space or as a DARTEL export file. This is useful for quality control.

Note: For a basic VBM analysis, use the defaults. - The Jacobian determinant for each voxel can be written in normalized space. This information can be used to do a Deformation-Based Morphometry (DBM) analysis.

Note: For a basic VBM analysis, this is not needed. - Finally, deformation fields can be written. This option is useful to re-apply normalization parameters to other co-registered images (e.g. fMRI or DTI data).

Note: For a basic VBM analysis, this is not needed.

- For GM and WM image volumes see information about native, normalized, and modulated volumes.

The resulting files are saved in the respective subfolders and follow the CAT naming convention.

Note: If the segmentation fails, this is often due to an unsuccessful initial spatial registration. In this case, you can try to set the origin (anterior commissure) in the Display tool. Place the cursor roughly on the anterior commissure and press "Set Origin". The now displayed correction in the coordinates can be applied to the image with the button "Reorient". This procedure must be repeated for each data set individually.

Second Module: Display Slices (optionally)

CAT → Data Quality → Single Slice Display

Parameters:

- Sample data <-X → Select Files → [select the new files] → Done

- Select the newly written data [e.g. the "wm*" files, which are the normalized bias-corrected volumes for VBM or the resampled and smoothed surfaces for SBM]. This tool will display one horizontal slice for each subject, thus giving a good overview of whether the segmentation and normalization procedures yielded reasonable results. For example, if the native volume had artifacts or if the native volumes had a wrong orientation, the results may look odd. Solutions: Use "Check Reg" from the SPM main menu to make sure that the native images have the same orientation as the MNI Template ("SPM → templates → T1"). Adjust if necessary using "Display" from the SPM main menu.

- Proportional scaling → [use defaults or modify]

- Check "yes" if you display T1 volumes.

- Spatial orientation

- Show slice in mm → [use defaults or modify]

- This module displays horizontal slices. This default setting provides a good overview.

Third Module: Estimate Total Intracranial Volume (TIV)

CAT → Statistical Analysis → Estimate TIV

Parameters:

- XML files <-X → Select Files → [select xml-files] → Done

- Select the xml-files in the report-folder [e.g. the "cat_*.xml"].

- Save values → TIV only

- This option will save the TIV values for each data set in the same order as the selected xml-files. Optionally you can also save the global values for each tissue class, which might be interesting for further analysis, but is not recommended if you are interested in only using TIV as a covariate.

- Output file → [use defaults or modify]

Please note that TIV is strongly recommended as a covariate for all VBM analyses to correct different brain sizes. This step is not necessary for deformation- or surface-based data. Please also make sure that TIV does not correlate too much with your parameters of interest (please make sure that you use "Centering" with "Overall mean", otherwise the check for orthogonality in SPM sometimes does not work correctly). In this case, you should use global scaling with TIV.

Fourth Module: Check Sample Homogeneity

CAT → Data Quality → Check Sample Homogeneity

Parameters:

- Data → New: Sample data <-X → Select Files → [select grey matter volumes] → Done

- Select the newly written data [e.g. the "mwp1*" files, which are the modulated (m) normalized (w) GM segments (p1)]. It is recommended to use the unsmoothed segmentations that provide more anatomical details. This tool visualises the correlation between the volumes using a boxplot and correlation matrices. Thus, it will help to identify outliers. Any outlier should be carefully inspected for artifacts or pre-processing errors using "Check worst data" in the GUI. If you specify different samples the mean correlation is displayed in separate boxplots for each sample.

- Load quality measures (leave empty for autom. search) → [optionally select xml-files with quality measures]

- Optionally select the xml-files that are saved for each data set. These files contain useful information about some estimated quality measures that can also be used for checking sample homogeneity. Please note that the order of the xml-files must be the same as the other data files. Leave empty for automatically searching for these xml-files.

- Global scaling with TIV [use defaults or modify]

- This option is to correct quartic mean Z-scores for TIV by global scaling. It is only meaningful for VBM data.

- Nuisance → [enter nuisance variables if applicable]

- For each nuisance variable which you want to remove from the data before calculating the correlation, select "New: Nuisance" and enter a vector with the respective variable for each subject (e.g. age in years). All variables have to be entered in the same order as the respective volumes. You can also type "spm_load" to upload a *txt file with the covariates in the same order as the volumes. A potential nuisance parameter can be TIV if you check segmented data with the default modulation.

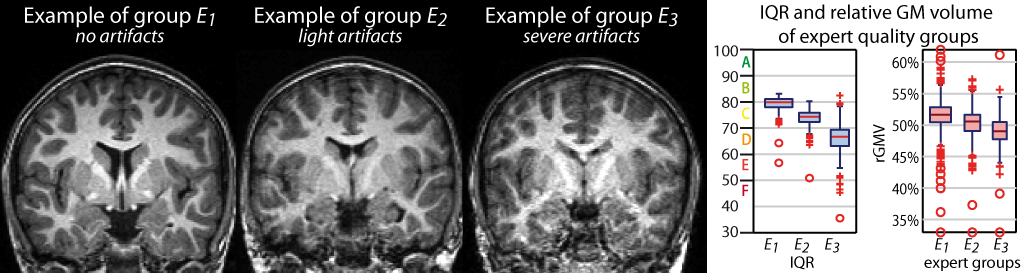

A window opens with a plot in which the quartic mean Z-scores for all volumes are displayed. Higher quartic mean Z-score values mean that your data are less similar to each other. The larger the quartic mean Z-score, the more deviant the data are from the sample mean. The reason we apply a power of 4 to the z-score (quartic) is to give outliers a greater weight and make them more obvious in the plot. If you click in the plot, the corresponding data are displayed in the lower right corner and allows a closer look. The slider below the image changes the displayed slice. The pop-up menus in the top right-hand corner provide more options. Here you can select other measures that are displayed in the boxplot (e.g. optional quality measures such as noise, bias, weighted overall image quality if these values were loaded). Finally, the most deviant data can be displayed in the SPM graphics window to check the data more closely. For surfaces, two additional measures are available (if you have loaded the XML files) to assess the quality of the surface extraction. The Euler number gives you an idea about the number of topology defects, while the defect size indicates how many vertices of the surface are affected by topology defects. Because topology defects are mainly caused by noise and other image artifacts (e.g. motion), this gives you an impression about the potential quality of your extracted surface.

The boxplot in the SPM graphics window displays the quartic mean Z-score values for each subject and indicates the homogeneity of your sample. A high quartic mean Z-score in the boxplot does not always mean that this volume is an outlier or contains an artifact. If there are no artifacts in the image and if the image quality is reasonable, you don't have to exclude this volume from the sample. This tool is intended to support the process of quality checking and there are no clear criteria defined to exclude a volume based only on the quartic mean Z-score value. However, volumes with a noticeably higher quartic mean Z-score (e.g. above two standard deviations) are indicated and should be checked more carefully. The same holds for all other measures. Data with deviating values do not necessarily have to be excluded from the sample, but these data should be checked more carefully with the "Check most deviating data" option. The order of the most deviating data is changed for every measure separately. Thus, the check for the most deviating data will give you a different order for mean correlation and other measures, which allows you to judge your data quality using different features.

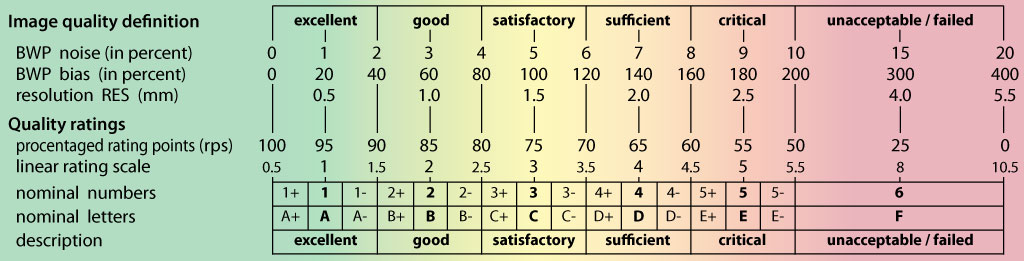

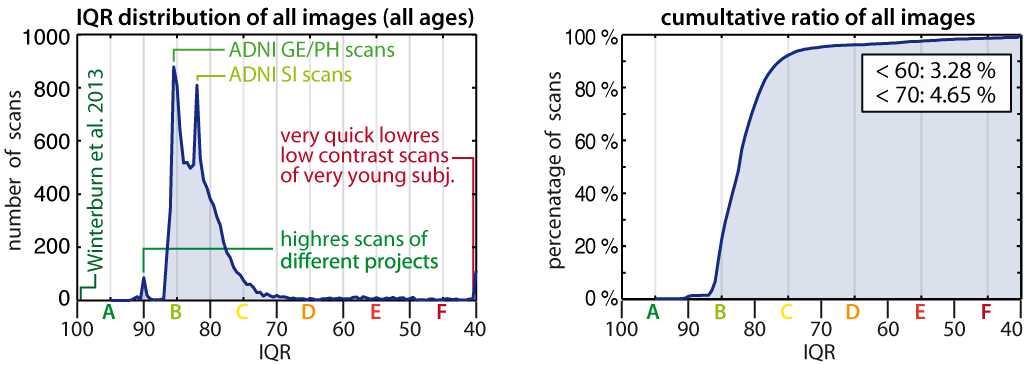

If you have loaded quality measures, you can also display the ratio between weighted overall image quality (IQR) and quartic mean Z-score. These two are the most important measures for assessing image quality. Mean Z-score measures the homogeneity of your data used for statistical analysis and is therefore a measure of image quality after pre-processing. Data that deviate from your sample increase variance and therefore minimize effect size and statistical power. The weighted overall image quality, on the other hand, combines measurements of noise and spatial resolution of the images before pre-processing. Although CAT uses effective noise-reduction approaches (e.g. spatial adaptive non-local means filter) pre-processed images are also affected and should be checked.

The product between IQR and quartic mean Z-scores makes it possible to combine these two measures of image quality before and after pre-processing. A low ratio indicates good quality before and after preprocessing and means that IQR is highly rated (resulting in a low nominal number/grade) and/or quartic mean Z-score is high. Both measures contribute to the ratio and are normalized before by their standard deviation. In the respective plot, the product value is colour-coded and each point can be selected to get the filename and display the selected slice to check data more closely.

Fifth Module: Smooth

SPM menu → Smooth

Parameters:

- Images to Smooth <-X → Select Files → [select grey matter volumes] → Done

- Select the newly written data [e.g. the "mwp1" files, which are the modulated (m) normalized (w) grey matter segments (p1)].

- FWHM → [use defaults or modify]

- 6–8 mm kernels are widely used for VBM. To use this setting select "edit value" and type "6 6 6" for a kernel with 6 mm FWHM.

- Data Type → [use defaults or modify]

- Filename Prefix → [use defaults or modify]

Building the statistical model

Starting with CAT12.8, a new batch mode is used to create statistical models. Now, only cross-sectional and longitudinal data are distinguished and only factorial designs and a few other necessary models are offered, but they cover all possible designs. The advantage of this new batch is that it provides tailored options for VBM and many of the rather confusing and unnecessary options in "Basic Models" or "Specify 2nd-level" have now been removed and some VBM-specific options such as correction for TIV are newly provided. This should make it easier to build the statistical model in CAT. However, the "Full factorial" design now used for cross-sectional data is a bit more complicated to use. Still, you are free to use the old statistical batch from the SPM GUI ("Basic Models" or "Specify 2nd-level") as an alternative.

Here are some hints on how to relate the old models to the new factorial design. For cross-sectional VBM data, you usually have 1–n samples (which corresponds to factor levels) and optionally covariates and nuisance parameters:

|

Number of factor levels |

Number of covariates |

Statistical Model |

|

1 |

0 |

one-sample t-test |

|

1 |

1 |

single regression |

|

1 |

>1 |

multiple regression |

|

2 |

0 |

two-sample t-test |

|

>2 |

0 |

ANOVA |

|

>1 |

>0 |

ANCOVA (for nuisance parameters) or Interaction (for covariates) |

General notes and options for statistical models

Please note that when using "Basic Models" in the CAT GUI, some unnecessary options have been removed compared to the "Basic Models" in SPM, and some useful options have been added. We therefore strongly recommend preferring to use the models in CAT, which offer some advantages especially when defining longitudinal designs.Covariates

This option allows for the specification of covariates and nuisance variables. TIV correction should instead be defined in "Correction of TIV".

Note that SPM does not make any distinction between effects of interest (including covariates) and nuisance effects. Covariates and nuisance parameters are handled in the same way in the GLM and differ only in the contrast used.

- Covariates → New Covariate

- Vector <-X → enter the values of the covariates (e.g. age in years) in the same order as the respective file names or type "spm_load" to upload a *.txt file with the covariates in the same order as the volumes

- Name <-X Specify Text (e.g. “age”)

- Interactions → None (or optionally "With Factor 1" to account for differences between the groups)

- Centering → Overall mean

Absolute masking is recommended for GM or WM volume data to ensure that you don’t analyse regions that don’t contain enough GM or WM or regions outside the brain. The default value of 0.1 is a good starting point for VBM data to ensure that only the intended tissue map is analysed. You can gradually increase this value up to "0.2" but you must make sure that your effects are not cut off.

If you analyse surface data, no additional masking is necessary.

For modulated VBM data, it is strongly recommended to always correct for TIV to account for different brain sizes. There are three options for TIV correction available:

- ANCOVA: Here, any variance that can be explained by TIV is removed from your data (i.e. in every voxel or vertex). This is the preferred option when there is no correlation between TIV and your parameter of interest. For example, a parameter of interest may be a variable in a multiple regression design that you want to relate to your structural data. If TIV is correlated with this parameter, not only will the variance explained by TIV be removed from your data, but also parts of the variance of your parameter of interest, which should be avoided.

Note that for two-sample T-tests and Anova with more than 2 groups, an interaction is modeled between TIV and group that prevents any group differences from being removed from your data, even if TIV differs between your groups.

Use the Check Design Orthogonality option to test for any correlations between your parameters. - Global scaling: If TIV correlates with your parameter of interest, you should rather use global scaling with TIV. The easiest way to define global scaling is to use "Basic Models" in the CAT GUI. If you prefer "Basic Models" in SPM, see here for a detailed description how to define global scaling.

- No: Use this option to disable TIV correction for deformation- or surface-based data where TIV correction is not necessary.

In order to identify images with poor image quality or even artefacts, you can use Check Sample Homogeneity. The idea of this option is to check the Z-score of all files across the sample using the files that are already defined in SPM.mat.

The Z-score is calculated for all images and the mean for each image is plotted using a boxplot (or violin plot) and the indicated filenames. The larger the quartic mean Z-score, the more deviant the data are from the sample mean. The reason we apply a power of 4 to the z-score (quartic) is to give outliers a greater weight and make them more obvious in the plot. In the plot, outliers from the sample are usually isolated from the majority of data which are clustered around the sample mean. The quartic mean Z-score is plotted on the y-axis and the x-axis reflects the data order.

The advantage of re-checking sample homogeneity at this point is that the given statistical model (design) is used and potential nuisance parameters are taken into account. If you have longitudinal data, the time points of each data set are linked in the graph to indicate intra-subject data. Unsmoothed data (if available and in the same folder) are automatically detected and used. Finally, report files are used if present (i.e., if data has not been moved or the folders renamed) and quality parameters are loaded and displayed.

If you have modeled TIV as a nuisance parameter, you must check whether TIV is orthogonal (in other words independent) to any other parameter of interest in your analysis (e.g. parameters you are testing for) using Check Design Orthogonality. See here for a detailed description.

This experimental option allows the specification of a voxel-wise covariate. If you have more than one modality (i.e., functional and structural data), this can be used (depending on the contrast you define) to:

- Remove the confounding effect of structural data (e.g. GM) on functional data or

- Investigate the relationship (regression) between functional and structural data.

In addition, an interaction can be modeled to examine whether the regression between functional and structural data differs between two groups.

Please note that the saved vSPM.mat file can only be analysed with the TFCE toolbox r221 or newer.

There exist different options to call results after statistical analysis:

- General function to call results

- Slice Overlay to display volume results (i.e. VBM)

- Surface Overlay that allows to overlay results from surfaces or volumes

Two-sample T-Test

CAT → Basic Models

Parameters:

- Directory <-X → Select Files → [select the working directory for your analysis] → Done

- Design → "Two-sample t-test"

- Group 1 scans → Select Files → [select the smoothed grey matter data for group 1; following this script these are the "smwp1" files] → Done

- Group 2 scans → Select Files → [select the smoothed grey matter data for group 2] → Done

- Independence → Yes

- Variance → Equal or Unequal

- Covariates

- Masking

- Threshold masking → Absolute → [specify a value (e.g. "0.1")]

- Implicit Mask → Yes

- Explicit Mask → <None>

- Correction of TIV → ANCOVA, Global scaling, or No

- Check design orthogonality and homogeneity → [use defaults]

Full factorial model (for a 2x2 Anova)

CAT → Statistical Analysis → Basic Models

Parameters:- Directory <-X → Select Files → [select the working directory for your analysis] → Done

- Design → "Any cross-sectional data (Full factorial)"

- Factors → "New: Factor; New: Factor"

Factor- Name → [specify text (e.g. "sex")]

- Levels → 2

- Independence → Yes

- Variance → Equal or Unequal

- Name → [specify text (e.g. "age")]

- Levels → 2

- Independence → Yes

- Variance → Equal or Unequal

- Specify Cells → "New: Cell; New: Cell; New: Cell; New: Cell"

Cell- Levels → [specify text (e.g. "1 1")]

- Scans → [select files (e.g. the smoothed GM data of the young males)]

- Levels → [specify text (e.g. "1 2")]

- Scans → [select files (e.g. the smoothed GM data of the old males)]

- Levels → [specify text (e.g. "2 1")]

- Scans → [select files (e.g. the smoothed GM data of the young females)]

- Levels → [specify text (e.g. "2 2")]

- Scans → [select files (e.g. the smoothed GM data of the old females)]

- Factors → "New: Factor; New: Factor"

- Covariates* (see the text box in example for two-sample T-test)

- Masking

- Threshold Masking → Absolute → [specify a value (e.g. "0.1")]

- Implicit Mask → Yes

- Explicit Mask → <None>

- Correction of TIV → ANCOVA, Global scaling, or No

- Check design orthogonality and homogeneity → [use defaults]

Multiple regression (linear)

CAT → Statistical Analysis → Basic Models

Parameters:- Directory <-X → Select Files → [select the working directory for your analysis] → Done

- Design → "Multiple Regression"

- Scans → [select files (e.g. the smoothed GM data of all subjects)] → Done

- Covariates → "New: Covariate"

- Vector → [enter the values in the same order as the respective file names of the smoothed images]

- Name → [specify (e.g. "age")]

- Centering → Overall mean

- Intercept → Include Intercept

- Covariates

- Masking

- Threshold masking → Absolute → [specify a value (e.g. "0.1")]

- Implicit Mask → Yes

- Explicit Mask → <None>

- Correction of TIV → ANCOVA, Global scaling, or No

- Check design orthogonality and homogeneity → [use defaults]

Multiple regression (polynomial)

To use a polynomial model, you need to estimate the polynomial function of your parameter before analysing it. To do this, use the function cat_stat_polynomial (included with CAT >r1140):

y = cat_stat_polynomial(x,order)

where "x" is your parameter and "order" is the polynomial order (e.g. 2 for quadratic).

Example for polynomial order 2 (quadratic)

CAT → Statistical Analysis → Basic Models

Parameters:- Directory <-X → Select Files → [select the working directory for your analysis] → Done

- Design → "Multiple Regression"

- Scans → [select files (e.g. the smoothed GM data of all subjects)] → Done

- Covariates → "New: Covariate"

- Vector → [specify linear term (e.g. "y(:,1)")]

- Name → [specify (e.g. "age-linear")]

- Centering → Overall mean

- Covariates → "New: Covariate"

- Vector → [specify quadratic term (e.g. "y(:,2)")]

- Name → [specify (e.g. "age-quadratic")]

- Centering → Overall mean

- Intercept → Include Intercept

- Covariates

- Masking

- Threshold masking → Absolute → [specify a value (e.g. "0.1")]

- Implicit Mask → Yes

- Explicit Mask → <None>

- Correction of TIV → ANCOVA, Global scaling, or No

- Check design orthogonality and homogeneity → [use defaults]

Full factorial model (interaction)

CAT → Statistical Analysis → Basic Models

Parameters:- Directory <-X → Select Files → [select the working directory for your analysis] → Done

- Design → "Any cross-sectional data (Full factorial)"

- Factors → "New: Factor"

Factor- Name → [specify text (e.g. "sex")]

- Levels → 2

- Independence → Yes

- Variance → Equal or Unequal

- Specify Cells → "New: Cell; New: Cell"

Cell- Levels → [specify text (e.g. "1")]

- Scans → [select files (e.g. the smoothed GM data of the males)]

- Levels → [specify text (e.g. "2")]

- Scans → [select files (e.g. the smoothed GM data of the females)]

- Factors → "New: Factor"

- Covariates → "New: Covariate"

- Covariate

- Vector → [enter the values in the same order as the respective file names of the smoothed images]

- Name → [specify (e.g. "age")]

- Interactions → With Factor 1

- Centering → Overall mean

- Covariate

- Masking

- Threshold Masking → Absolute → [specify a value (e.g. "0.1")]

- Implicit Mask → Yes

- Explicit Mask → <None>

- Correction of TIV → ANCOVA, Global scaling, or No

- Check design orthogonality and homogeneity → [use defaults]

Full factorial model (polynomial interaction)

To use a polynomial model you have to estimate the polynomial function of your parameter prior to the analysis. Use the function cat_stat_polynomial (provided with CAT >r1140) for that purpose:

y = cat_stat_polynomial(x,order)

where "x" is your parameter and "order" is the polynomial order (e.g. 2 for quadratic).

Example for polynomial order 2 (quadratic)

CAT → Statistical Analysis → Basic Models

Parameters:- Directory <-X → Select Files → [select the working directory for your analysis] → Done

- Design → "Any cross-sectional data (Full factorial)"

- Factors → "New: Factor"

Factor- Name → [specify text (e.g. "sex")]

- Levels → 2

- Independence → Yes

- Variance → Equal or Unequal

- Specify Cells → "New: Cell; New: Cell"

Cell- Levels → [specify text (e.g. "1")]

- Scans → [select files (e.g. the smoothed GM data of the males)]

- Levels → [specify text (e.g. "2")]

- Scans → [select files (e.g. the smoothed GM data of the females)]

- Factors → "New: Factor"

- Covariates → "New: Covariate; New: Covariate"

- Covariate

- Vector → [specify linear term (e.g. "y(:,1)")]

- Name → [specify (e.g. "age-linear")]

- Interactions → With Factor 1

- Centering → Overall mean

- Covariate

- Vector → [specify quadratic term (e.g. "y(:,2)")]

- Name → [specify (e.g. "age-quadratic")]

- Interactions → With Factor 1

- Centering → Overall mean

- Covariate

- Masking

- Threshold Masking → Absolute → [specify a value (e.g. "0.1")]

- Implicit Mask → Yes

- Explicit Mask → <None>

- Correction of TIV → ANCOVA, Global scaling, or No

- Check design orthogonality and homogeneity → [use defaults]

Estimating the statistical model

CAT → Estimate (Surface) Models

Select SPM.mat which you just built.

Note that for surface data you should rather use this estimation option instead of "Estimate" in the SPM GUI, otherwise the underlying surface after calling the results would mistakenly be the surface of the first selected data set instead of the average surface.Checking for design orthogonality

If you have modeled TIV as a nuisance parameter, you must check whether TIV is orthogonal (in other words independent) to any other parameter of interest in your analysis (e.g. parameters you are testing for). This means that TIV should not correlate with any other parameter of interest, otherwise not only the variance explained by TIV is removed from your data, but also parts of the variance of your parameter of interest.

Please use "Overall mean" as "Centering" for the TIV covariate. Otherwise, the orthogonality check sometimes even indicates a meaningful co-linearity only because of scaling issues.

To check the design orthogonality, you have 3 options:

- Use the respective option in Basic Models in CAT.

- Use the Check design orthogonality and homogeneity function in CAT

- Use the Review function in the SPM GUI and select Design orthogonality in the menu.

|

The larger the correlation between TIV and any parameter of interest, the more cautious one must be when using TIV as a nuisance parameter. In this case an alternative approach is to use global scaling with TIV. If you use Basic models in the SPM GUI, the settings below for this approach can be used.

Please note that when using "Basic Models" in the CAT GUI, the global scaling option prepares that for you, which eases the process and is the recommended way, and the three steps in the next list are not needed.- Global Calculation → User → Global Values <-X → Define the TIV values here

- Global Normalisation → Overall grand mean scaling → Yes → Grand mean scaled value → Define here the mean TIV of your sample (or as approximation a value of 1500 which might fit for the majority of data from adults)

- Normalisation → Proportional

This approach scales the data (proportionally) according to the individual TIV values. Instead of removing variance explained by TIV, all data are scaled by their TIV values. This is also similar to the approach that was used in VBM8 ("modulate for non-linear effects only"), where global scaling was internally used with an approximation of TIV (e.g. inverse of affine scaling factor).

Please note that global normalization also affects the absolute masking threshold as your images are now scaled to the "Grand mean scaled value" of the Global Normalization option. If you have defined the mean TIV of your sample (or an approximate value of 1500) here, no change of the absolute threshold is required. Otherwise, you will have to correct the absolute threshold because your values are now globally scaled to the "Grand mean scaled value" of the Global Normalization option.

An alternative approach for all models with more than one group should not be unmentioned. This is to use TIV as a nuisance parameter with interaction and mean centering with the factor "group". This interaction is automatically taken into account when you use the TIV ANCOVA correction in CAT Basic Models. That approach will result in separate columns of TIV for each group. The values for the nuisance parameters are here mean-centered for each group. As the mean values for TIV are corrected separately for each group, it is prevented that group differences in TIV have a negative impact on the group comparison of the image data in the GLM. Variance explained by TIV is here removed separately for each group without affecting the differences between the groups.

Unfortunately, no clear advice for either of these two approaches can be given, and the use of these approaches will also affect the interpretation of your findings.

Defining contrasts

SPM menu → Results → [select the SPM.mat file] → Done (this opens the Contrast Manager) → Define new contrast (i.e., choose "t-contrast" or "F-contrast"; type the contrast name and specify the contrast by typing the respective numbers, as shown below).

You can also use the SPM Batch Editor to automate that procedure:

SPM Batch Editor → SPM → Stats → Contrast Manager

Please note that all zeros at the end of the contrast don't have to be defined, but are sometimes retained for didactic reasons.

|

Two-sample T-test |

|

|

T-test |

|

|

1 -1 0 |

|

-1 1 0 |

|

F-test |

|

|

eye(2)-1/2 |

|

eye(2) |

|

2x2 ANOVA |

|

|

T-test |

|

|

1 -1 0 0 0 |

|

0 0 1 -1 0 |

|

1 0 -1 0 0 |

|

0 1 0 -1 0 |

|

1 1 -1 -1 0 |

|

1 -1 1 -1 0 |

|

1 -1 -1 1 0 |

|

-1 1 1 -1 0 |

|

F-test |

|

|

1 -1 0 0 0 |

|

0 0 1 -1 0 |

|

1 0 -1 0 0 |

|

0 1 0 -1 0 |

|

1 1 -1 -1 0 |

|

1 -1 1 -1 0 |

|

1 -1 -1 1 0 |

|

eye(4)-1/4 |

|

eye(4) |

|

Multiple Regression (Linear) |

|

|

T-test |

|

|

0 0 1 |

|

0 0 -1 |

|

F-test |

|

|

0 0 1 |

|

The two leading zeros in contrast indicate the constant (sample effect, 1st column in the design matrix) and TIV (2nd column in the design matrix). If no additional covariate such as TIV is defined you must skip one of the leading zeros (e.g. "0 1" and "0 -1"). |

|

|

Multiple Regression (Polynomial) |

|

|

T-test |

|

|

0 0 1 0 |

|

0 0 0 1 |

|

0 0 -1 0 |

|

0 0 0 -1 |

|

F-test |

|

|

0 0 1 0 |

|

0 0 0 1 |

|

[zeros(2,2) eye(2)] |

|

The two leading zeros in the contrast indicate the constant (sample effect, 1st column in the design matrix) and TIV (2nd column in the design matrix). If no additional covariate such as TIV is defined you must skip one of the leading zeros (e.g. "0 1"). |

|

|

Interaction (Linear) |

|

|

T-test |

|

|

0 0 1 -1 0 |

|

0 0 -1 1 0 |

|

F-test |

|

|

0 0 1 -1 0 |

|

[zeros(2,2) eye(2)] |

|

The two leading zeros in the contrast indicate the main effect "group" and should be always set to "0" in the contrast, because we are rather interested in the interaction. |

|

|

Interaction (Polynomial) |

|

|

T-test |

|

|

0 0 1 -1 0 0 0 |

|

0 0 -1 1 0 0 0 |

|

0 0 0 0 1 -1 0 |

|

0 0 0 0 -1 1 0 |

|

F-test |

|

|

0 0 1 -1 0 0 0 |

|

0 0 0 0 1 -1 0 |

|

0 0 1 -1 0 0 0 |

|

[zeros(4,2) eye(4)] |

The two leading zeros in the contrast indicate the main effect "group" and should be always set to "0" in the contrast, because we are rather interested in the interaction.

Defining F-contrasts

For Anovas (e.g. comparison between groups) the test for any difference between the groups can be defined with:

eye(n)-1/n

where n is the number of columns of interest.

For interaction effects (e.g. any interaction between covariate and group) you need to add leading zeros to leave out the group effects:

[zeros(n,m) eye(n)-1/n]

where m is the number of group effects.

For a regression design (e.g. any regression slope) you are not interested in differential effects as described above, but rather in any effect of a regression parameter:

[zeros(n,m) eye(n)]

where n is the number of columns of interest (e.g. regression parameters) and m is the number of leading columns you need to exclude (i.e. effects of no interest such as the constant or any other covariates of no interest).

In order to plot any contrast estimate or fitted response, however, you have to define the effects of interest by using:

[zeros(n,m) eye(n)]

where n is the number of columns of interest and m is the number of leading columns you need to exclude (i.e. effects of no interest such as the constant or any other covariates of no interest).

This F-contrast (effects of interest) is necessary to correctly plot contrast estimates or fitted responses. Thus, it's recommended to always define this contrast.

Getting Results

SPM menu → Results → [select a contrast from Contrast Manager] → Done

- Mask with other contrasts → No

- Title for comparison: [use the pre-defined name from the Contrast Manager or change it]

- P value adjustment to:

- None (uncorrected for multiple comparisons), set threshold to 0.001

- FDR (false discovery rate), set threshold to 0.05, etc.

- FWE (family-wise error), set threshold to 0.05, etc.

- Extent threshold: (either use "none" or specify the number of voxels)*

CAT for longitudinal data

Background

The majority of morphometric studies are based on cross-sectional data in which one image is acquired for each subject. However, to map structural changes over time (e.g., in the framework of use-dependent plasticity, neurodevelopment, or ageing), longitudinal designs that consider additional time points for each subject are necessary. The handling of these longitudinal data requires a customized processing, as a voxel- or point-wise comparability needs to be assured not only across subjects, but also across time points within subjects. This requires an inverse-consistent (or "symmetric") realignment as well as an intra-subject bias field correction (Ashburner and Ridgway, 2012; Reuter et al., 2010; Reuter and Fischl, 2011). Thus, CAT first realigns the images from all time points using inverse-consistent rigid-body registrations and applies intra-subject bias-field corrections. Subsequently, the resulting images are processed individually (see Figure 4, 5) using the standard pipeline.